‘Take a risk and keep testing, because what works today won’t work tomorrow, but what worked yesterday may work again’. These words by Amrita Sahasrabudhe, the Senior Vice President of Marketing at FastMed, echo profoundly within the domain of App Store Optimization (ASO), particularly when it comes to ASO A/B testing.

Did you know that well-thought-out (and well-implemented) A/B testing can increase conversion by as much as 30%? Now, that sounds tempting. It’s no secret that, for this very reason, ASO A/B testing is now an everyday activity for most marketers. Think of it as the top-level tool that helps you predict the future. After all, it’s A/B testing that helps you measure, tweak, and refine your app’s presentation for the purpose of boosting downloads.

In this post, you’ll find out:

- what exactly A/B testing is;

- why A/B testing is so important;

- who usually performs ASO A/B testing;

- about specific steps to follow when performing A/B testing;

- about mistakes to avoid along the way.

‘It doesn’t make any difference how beautiful your guess is, it doesn’t make any difference how smart you are, who made the guess, or what his name is. If it disagrees with experiment, it’s wrong.’ This quote from the brilliant book Experimentation Works: The Surprising Power of Business Experiments by Stefan H. Thomke introduces the perks and tools of experimentation practice in business — and so does this post. Stay tuned.

What Is ASO A/B Testing?

Let’s start from square one. After working up your first app store listing, some polishing and optimization are a must. Without a thorough analysis, it can be hard to estimate whether adding more screenshots or changing your app icon will boost your downloads. If you don’t want to risk the progress you’ve already made, mobile app split testing is your best choice at this point.

Also known as ASO split testing, it’s the process of running an experiment on elements within an app listing on the app stores for the purpose of comparing two or more versions of your app’s presentation and determining which one works best, conversion-wise.

Throughout this experiment, you keep your current version of the app store page and design another variation to test one particular element. After that, your existing app listing is displayed to one user group, and the new version will be displayed to another user group within your target audience. During the experiment, all these users are totally unaware of the testing. Therefore, they act absolutely naturally, allowing you to take your time and identify the best-performing version.

The ultimate purpose of ASO split testing is crystal clear: optimizing your app’s visibility, increasing user engagement, and boosting downloads. Even though the main focus of ASO A/B testing is increasing conversion, the procedure comes with many more perks, such as the possibility to delve deep into marketing activity, analyze user segments and user experience, as well as evaluate the efficiency of new traffic channels. Mobile app A/B tests can be conducted either to optimize your store listing or to refine your presence within app store search results.

Here’s a quick roundup of your ‘split-testable’ app assets:

- Icon. In this case, the experiment comes down to testing different app icons to discover which one attracts more clicks and conveys your app’s essence effectively.

- Screenshot. It involves analysis of variations in the order, content, and design of your app’s screenshots to captivate users and tell a compelling story.

- Description. It’s about tweaking your app’s description to make it more informative, persuasive, and in sync with user expectations.

- Keyword. It suggests trying out various keywords to pinpoint the most effective ones that improve your app’s visibility in search results.

All in all, split testing allows you to determine if you need to adjust your product positioning or not, thus eliminating the guesswork from your ASO strategy. As a result, your ‘I think’ gets confidently and effectively replaced by the solid, professional ‘I know’.

10 Reasons Why Running ASO A/B Testing Is Important

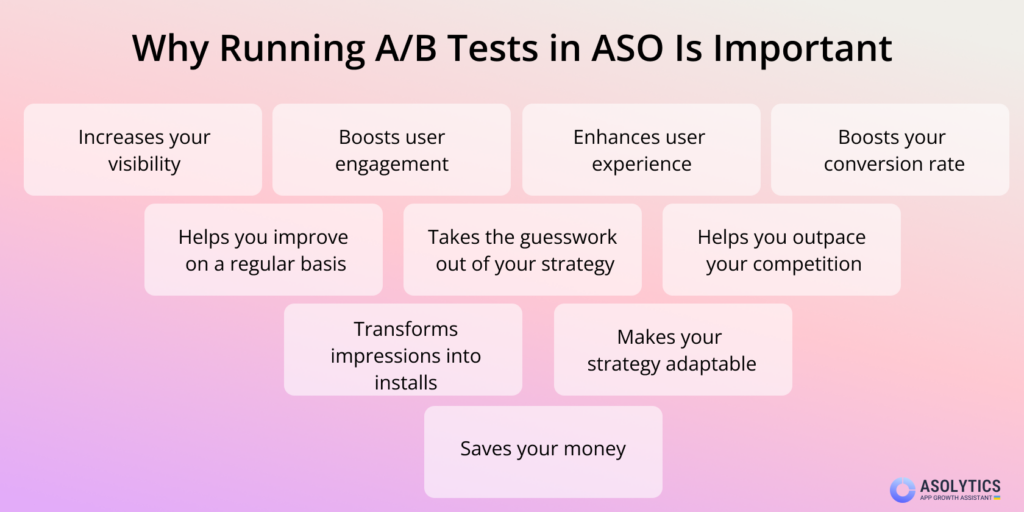

Did you know that over 97% of businesses say they run A/B tests? Oh yes, they have their solid reasons. We’ve rounded them all up in one handy 10-reason selection below:

- It increases your visibility

Improve your app’s visibility in app stores by experimenting with different elements to determine which combinations attract more potential consumers.

- It transforms impressions into installs

A/B testing helps you identify what resonates with your target audience, increasing download rates and driving more organic installs.

- It boosts user engagement

Fine-tuning elements such as screenshots, icons, and descriptions can enhance user engagement. This, in turn, results in longer retention and, what’s equally important, increased revenue.

- It takes the guesswork out of your strategy

With solid A/B testing results at your fingertips, your decisions are based on the real preferences of your target audience. Therefore, you can be confident in your ASO course of action.

- It enhances user experience

Test various user interface elements to create an intuitive and even joyful experience for your app’s audience.

- It helps you outpace your competition

By staying agile and continuously optimizing your app’s store page, you can keep up with and even outperform competitors.

- It boosts your conversion rate

Via proper A/B testing, you can discover which elements encourage users to take desired actions, whether it’s downloading, subscribing, or making in-app purchases.

- It saves you money

Effective A/B testing can help you allocate marketing budgets more efficiently by focusing on what works best.

- It makes your strategy adaptable

Adapt rapidly to shifts in user behavior, industry trends, and algorithm updates by fine-tuning your app’s presentation.

- It helps you improve on a regular basis

A/B testing is a continuous process, allowing you to constantly enhance your app’s store page and keep it fresh and appealing to users.

Now that you’re aware of each and every reason why you should be A/B testing, let’s discuss if you need to be a tech genius to get the procedure going.

Who Usually Performs Mobile A/B Testing

A/B testing in App Store Optimization is a powerful tool, but it’s not exclusive to rocket scientists or tech gurus. Anyone with a mobile app can (and should) dive into this game. Here’s a quick guide to help you master ASO A/B testing, regardless of your background or experience:

- App owners and marketers

App creators, product managers, and marketing teams should lead the charge. They have the most vested interest in optimizing the app’s performance.

- Independent developers

Even solo app developers can benefit from A/B testing. A/B tests can uncover a treasure trove of valuable data that leads to increased downloads and revenue.

- ASO tools and platforms

Use Asolytics tools on a regular basis for maximized results. The platform will simplify the testing process for you and provide priceless insights.

- Freelancers and ASO specialists

Hire professionals if you lack the time or expertise. ASO A/B testing specialists can create and manage the required tests to maximize your app’s potential.

Here are some quick, must-follow tips if you want to take your A/B testing for mobile apps to the next level:

- Start Simple. Begin with fundamental elements like icons, screenshots, and titles before diving into more complex tests.

- Set Clear Goals. Define specific objectives for each test, such as increasing downloads or improving user retention.

- Monitor Metrics. Track key metrics like app conversion rate and user engagement to assess test performance.

- Adjust and Repeat. Don’t be afraid to run multiple tests and continually refine your app’s store page. What works today may not work tomorrow, remember?

A/B Testing for Mobile Apps: 9 Vital Steps to Follow

There are several phases to go through when performing your mobile split tests. Don’t neglect any of them, and keep reading to get to grips with how these phases work. They are reflected in a recommendation-packed 9-step guide provided by our experts below:

- Research. Research. Research

At this initial stage of your path, you’re going to need to determine what’s missing in your app store listing or what could be enhanced. If this task seems challenging, seek inspiration from the following recommendations:

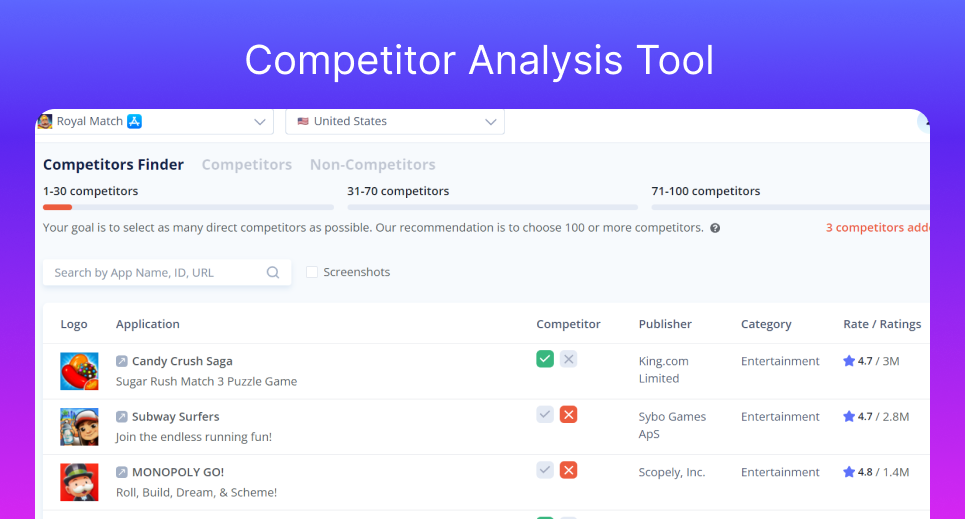

- Monitor Your Competitors. Use Asolytics to analyze successful competitor apps in your niche and incorporate proven strategies into your A/B tests.

- Assess Your Category. Broaden your research by analyzing apps within your category. This will help you find refreshing ideas to implement and still follow your category rules.

- Expand Your Horizons. Think beyond app stores. Explore other types of media that your potential consumers fancy. For instance, the Space Sheep Games team, in their quest for new game screenshots, found inspiration from the MTV reality show ‘Ex on The Beach.’ Upon utilizing this concept to craft new visuals, their experiment unveiled a substantial boost in conversions, ranging from 45% to 73%.

Use the Competitors tool in Asolytics to find all the similar apps in your niche

Bonus Tip. At this stage, you can use mobile A/B testing to pinpoint the most effective ad channels for driving quality traffic. By launching campaigns across various networks but targeting the same audience, you gain insights into which ad channels yield the highest converting visitors. This strategic approach empowers you to allocate your ad budget with precision.

- Identify your goal & establish a hypothesis

You need a clear objective, whether it’s about mobile game influencer marketing or mobile app split testing. In your case, is your goal to increase downloads, improve user engagement, boost in-app purchases, or enhance your conversion rate?

Now, let’s delve into what a hypothesis is. A/B-testing-for-ASO-wise, a hypothesis is a prediction you create before conducting your experiment. It should clearly state what is being improved, what you believe the result will be, and why you think so. Conducting your A/B tests will either support or refute your hypothesis. Bear in mind that hypotheses are bold statements, not vague questions.

Based on the Space Sheep Games example provided above, a good hypothesis is something like: ‘My goal is optimizing my screenshots to boost conversion. My potential consumers watch reality shows like ‘Ex on the Beach’. If we come up with game screenshots using this show as an inspiration, my conversion rate from the app store visitor to app download will skyrocket.’

- Choose a variable

Select a single element to test, such as the app icon, screenshots, app title, description, or keywords. Do not focus on more than one variable. This will guarantee clear and effective results.

Here are useful tips from our experts to guide you:

- Prioritize Based on Goals. Choose the variable that aligns most closely with your current ASO goals, whether it’s improving click-through rates, enhancing conversion rates, or increasing downloads. For instance, if you aim to increase click-through rates, testing different screenshots for visual appeal might be the way to go.

- Maintain Clarity. Ensure that the variable you select is distinct and easily distinguishable, making it simpler to analyze the results. For example, if you’re testing screenshots, ensure each version conveys a distinct message or feature.

- Practice Sequential Testing. Once you’ve tested one variable, proceed to the next, keeping a systematic approach to optimization.

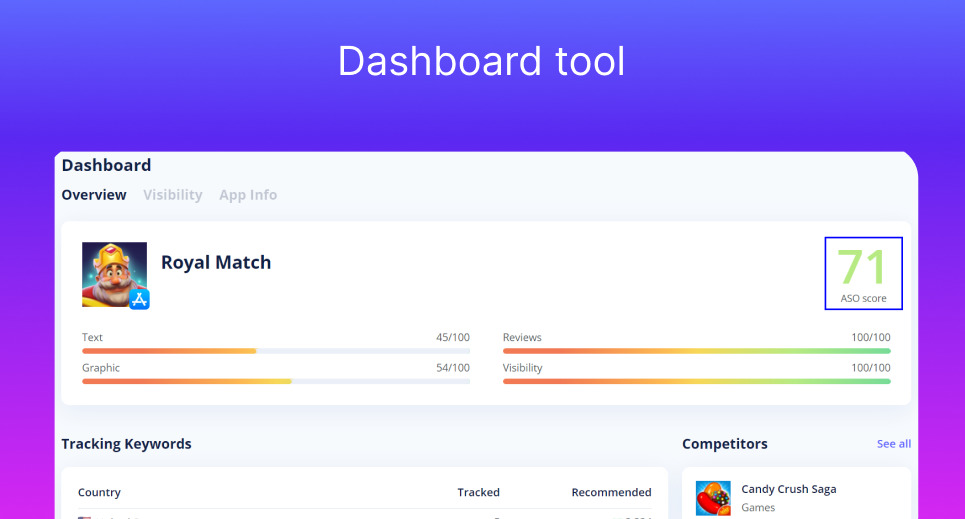

You can get an evaluation of how well your text and visual assets are adapted to the store in your app’s Dashboard

- Create variations

Develop alternative versions of the chosen variable. For instance, if you’re testing the app icon, design two different icons. Ensure they are distinct to provide meaningful insights.

Here are some vital aspects to consider when creating effective variations:

- Visual Elements. If you’re testing app icons, consider variations in design, color, or style. For instance, you could create one icon with a vibrant color scheme and another with a more muted palette.

- Textual Content. When experimenting with app descriptions, vary the tone, content, or structure. For instance, create one description emphasizing user benefits and another focusing on key features.

- Screenshot Stories. Test different narratives or angles in your screenshot order or captions. For example, arrange screenshots to showcase the app’s features first, and in the second version, highlight user testimonials.

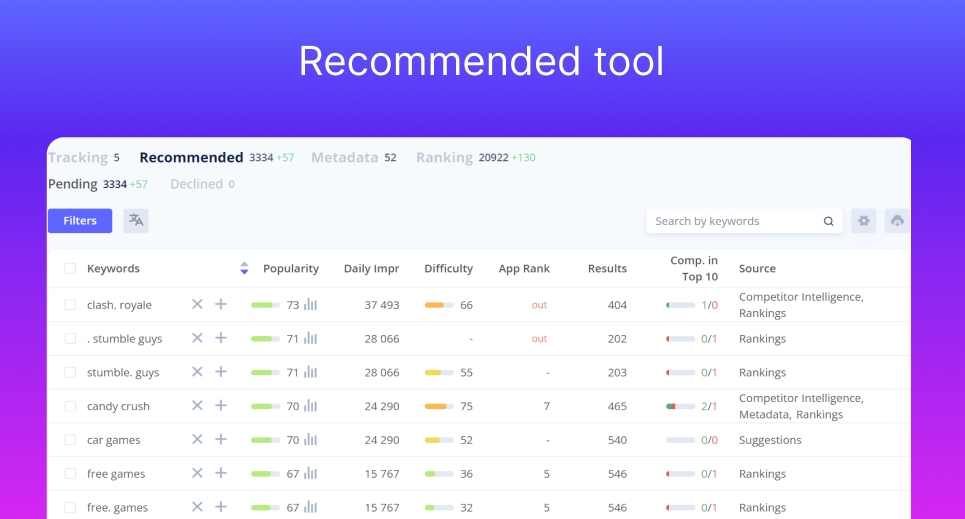

- Keyword Tweaks. If your A/B test involves keywords, alter the phrasing or placement. For instance, modify the primary keyword’s position within the title.

To collect keywords for A/B tests, use the Recommended tool in Asolytics

- Split your audience

Randomly divide your audience into two groups: the control group (A) and the variant group (B). The control group experiences the current version, while the variant group sees the new version.

Below, you’ll find some key takeaways from our experts to help you cope with this task:

- Random Selection. Ensure a random and unbiased division of your audience into control and variant groups. This prevents selection bias and maintains the test’s integrity.

- Equal Sample Sizes. Aim for equal group sizes to ensure a fair comparison between your control and variant versions.

- Targeted Geographies. If your app caters to a global audience, consider segmenting your audience by geographic regions to account for cultural differences and preferences.

- Time-Based Segmentation. Depending on your app’s usage patterns, you may choose to segment users based on the time they use the app, ensuring you capture data across various usage scenarios.

- User Behavior Profiles. Segmenting users based on their behavior within the app, such as new users versus returning users, can provide vital insights into how different audiences respond to your changes.

For example, if you’re testing a new app icon, ensure that both control and variant groups consist of users from diverse locations, time zones, and usage patterns. This way, your A/B test results can offer a comprehensive view of how different user segments react to the change.
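Store-native experiment tools typically handle the split for you. If you ever need to do it yourself (say, on a custom landing page used for pre-store tests), a random yet stable assignment can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `assign_group`, the experiment label `icon-test`, and the 50/50 default share are made up for the example and do not reflect how any app store works internally.

```python
import hashlib


def assign_group(user_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Return 'A' (control) or 'B' (variant) for a user.

    Hypothetical helper: hashing the experiment name together with the user ID
    gives every user a stable, effectively random bucket, so the same user
    always sees the same version for the whole test.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < variant_share else "A"


# Usage: split 10,000 made-up user IDs and check that the groups are roughly equal.
users = [f"user-{i}" for i in range(10_000)]
groups = [assign_group(u, "icon-test") for u in users]
print("A:", groups.count("A"), "B:", groups.count("B"))
```

Hashing instead of calling a random generator on every visit keeps the assignment consistent across sessions, which matters when the same person opens the page more than once.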

- Run the experiment

The implementation stage is a crucial moment in A/B testing for ASO. Follow these expert tips for a buttery-smooth procedure:

- Practice Consistent Timing. Launch both control and variant versions simultaneously to minimize external variables. For instance, if you’re testing the app icon, ensure both versions go live on the app store at the same time.

- Implement Equal Exposure. Ensure that both versions receive an equal and random distribution to avoid biases. For a screenshot test, allocate the same exposure time to each variant.

- Monitor Like a Pro. Establish a monitoring system to track the test’s progress and any unexpected issues. Use tools like App Store Connect or Google Play Console for real-time data tracking.

- Preserve a Uniform User Experience. Throughout the test, maintain a consistent user experience by avoiding abrupt changes that could confuse or deter users. For a description test, keep the core message consistent while adjusting other details.

To effectively measure ASO performance and make data-driven decisions, it’s imperative to conduct A/B testing on various elements of your app store listing. There’s an unbreakable rule in ASO A/B testing: the experiment should never end before 7 days have passed. That way, your test spans weekdays and weekends, capturing diverse user behaviors. Ideally, mobile A/B tests should span a duration of 7 to 14 days.
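The duration rule is easy to turn into a small guard for your own reporting scripts. The sketch below is an assumption-laden example rather than a standard tool: the function name `check_duration` and the message wording are invented here, and the duration is counted as full days elapsed between the start and end dates.

```python
from datetime import date

MIN_DAYS = 7   # the minimum stated by the 7-day rule
MAX_DAYS = 14  # upper end of the suggested 7 to 14 day range


def check_duration(start: date, end: date) -> str:
    """Report whether a test ran long enough (hypothetical helper)."""
    days = (end - start).days
    if days < MIN_DAYS:
        return f"too short: {days} days, keep the test running"
    if days > MAX_DAYS:
        return f"{days} days: longer than the suggested range"
    return f"ok: {days} days"


# Usage: a test started on March 1 and checked on March 5 is not ready yet.
print(check_duration(date(2024, 3, 1), date(2024, 3, 5)))
print(check_duration(date(2024, 3, 1), date(2024, 3, 11)))
```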

- Monitor and collect data. Analyze results. Draw conclusions

Here’s a breakdown of the aspects that are vital throughout this stage:

- Continuous Surveillance. Regularly check key metrics like click-through rates, conversion rates, and user engagement during the entire test period.

- Data Sources. Utilize the most effective tools for data collection and analysis.

- Statistical Significance. Ensure you collect enough data to achieve statistical significance. For instance, if you’re testing a new app title, track how each version performs over several days or weeks to make meaningful comparisons.

- Early Anomalies. Keep an eye out for unexpected data variations. If a particular variant experiences an unusual spike in downloads, investigate to understand the cause.

After the test period, compare the performance of the control and variant groups. Determine which version yielded better results in alignment with your goal. Based on your analysis, draw conclusions about the impact of the changes. Decide whether to implement the winning version or continue testing.

When the results indicate that the new variation leads by under 2%, consider carefully before proceeding with store listing updates. To achieve a noteworthy impact, aim for an advantage of at least 3% on the winning variation.
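To make the comparison concrete, here is a minimal Python sketch that computes the lift of the variant over the control and checks statistical significance with a pooled two-proportion z-test. Several things here are assumptions: the post does not name a specific test, so the z-test is one common choice; the 3% threshold is read as a relative lift; the 0.05 significance level is a conventional default; and all function names and visitor numbers are invented for illustration.

```python
import math


def relative_lift(rate_a: float, rate_b: float) -> float:
    """Relative improvement of variant B over control A (0.03 means 3%)."""
    return (rate_b - rate_a) / rate_a


def two_proportion_p_value(installs_a: int, visitors_a: int,
                           installs_b: int, visitors_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a = installs_a / visitors_a
    p_b = installs_b / visitors_b
    pooled = (installs_a + installs_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (p_b - p_a) / se
    # For a standard normal Z, P(|Z| > |z|) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def verdict(installs_a: int, visitors_a: int, installs_b: int, visitors_b: int,
            min_lift: float = 0.03, alpha: float = 0.05) -> str:
    """Apply the variant only if the lift is large enough and significant."""
    lift = relative_lift(installs_a / visitors_a, installs_b / visitors_b)
    p_value = two_proportion_p_value(installs_a, visitors_a, installs_b, visitors_b)
    if lift >= min_lift and p_value < alpha:
        return "apply variant B"
    return "keep control A or keep testing"


# Usage with made-up numbers: 20,000 store visitors per group.
print(verdict(installs_a=1000, visitors_a=20000, installs_b=1150, visitors_b=20000))
```

Checking both conditions guards against two different traps: a large lift measured on too few visitors, and a statistically solid result that is too small to be worth a store listing update.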

Bonus Tip. If the result shows that your hypothesis was wrong, don’t get too discouraged. Remember why you did an A/B test in the first place? To avoid mistakes that would take your conversion rates from hero to zero.

- Implement changes

Now that you’ve analyzed your A/B test results, it’s time to translate insights into action. Here are key tips for this crucial step:

- Prioritize High-Impact Findings. Focus on changes that promise the most significant improvements, such as adopting a new app icon that boosted conversion rates by 10%.

- Maintain Consistency. While making changes, ensure your brand’s visual and messaging consistency. For instance, if you’re adjusting the app description, make sure it goes well with the overall brand tone and style.

- Test Gradual Changes. If significant changes are needed, consider gradual implementation to monitor user reactions. For example, if your A/B test recommended an entirely new title, transition to it over a few weeks.

- Track and Assess. Continuously monitor the performance after implementing changes. If, for instance, you changed your screenshots, keep an eye on user engagement metrics to ensure sustained improvement.

- Improve your ASO strategy based on received insights and don’t stop testing

You know that ASO is a never-ending journey, much like a smartphone in the hands of a teenager — always evolving, constantly tweaking, and forever in pursuit of the next big trend! So at this stage, feel the power of your experiment — and get ready for a new one! There’s always room for improvement, which means your A/B tests will go on and on. Furthermore, the app stores’ rules are constantly changing, a slew of new apps are released on a daily basis, and some competitors are as unpredictable as a kangaroo’s morning workout routine. Therefore, if you want to keep your market share and profits higher than the others, you need to always stay updated.

ASO A/B Testing: The Don’ts

If you want your A/B tests to bring solid results, do your best to avoid these mistakes:

- Testing Too Many Variables. Focusing on multiple variables simultaneously can make it challenging to identify which changes led to improved results. Stick to one variable per test, such as the app icon or the title, to maintain clarity.

- Short Test Durations. Running tests for too short a time frame can lead to unreliable results. For instance, if you terminate a test within a couple of days, you may not capture the full spectrum of user behavior, especially considering variations over weekdays and weekends. Remember the 7-day rule we’ve discussed above? Follow it religiously.

- Ignoring User Segmentation. Not segmenting your audience based on relevant criteria like user behavior or demographics can obscure insights. For instance, a change might have a positive impact on new users but a negative one on returning users.

- Ignoring External Factors. Neglecting to account for external influences, such as a running marketing campaign or seasonality, can skew results. An example would be attributing an increase in downloads solely to a new app icon, when it was influenced by an ongoing marketing campaign.

- Inadequate Sample Sizes. Running tests with insufficient sample sizes can produce unreliable results. For example, if you only test your changes with a small fraction of your user base, your findings may not be representative.
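How many visitors is enough? One common way to estimate it before launching a test is the textbook sample size formula for comparing two proportions. The Python sketch below is an approximation under stated assumptions, not a rule from any app store: the function name `visitors_per_group`, the 5% significance level, and the 80% power are conventional defaults chosen for the example, and the baseline conversion rate is a made-up figure.

```python
import math
from statistics import NormalDist


def visitors_per_group(baseline_rate: float, relative_lift: float,
                       alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH group to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Usage: a 5% baseline conversion rate and two hoped-for relative lifts.
print(visitors_per_group(0.05, 0.10))
print(visitors_per_group(0.05, 0.03))
```

The smaller the lift you want to detect, the more visitors each group needs, which is why low-traffic apps often have to run their tests closer to the upper end of the recommended duration.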

Wrapping It All Up

In our comprehensive post, we’ve shed some guiding light on the exceptional importance of knowledge-based and A/B-tested decision-making when it comes to App Store Optimization. As Albert Einstein once wisely said, ‘The only source of knowledge is experience’. In the world of mobile app marketing, A/B testing is the perfect gateway to that knowledge.

Armed with the tips and tools provided in this post, you are now free to build a successful ASO strategy, improve your app on a regular basis, as well as boost its visibility and performance. So Research, Test, Analyze, Repeat — and have fun along the way!

To stay ahead in the competitive app market, regularly checking our ASO blog for the latest strategies and updates on A/B testing is invaluable for developers and marketers alike.

FAQ

What is A/B testing for ASO?

It’s a method to compare and optimize various elements of a mobile app’s store listing, such as icons, titles, descriptions, or screenshots, by testing multiple variations to determine which performs best in attracting and retaining audiences. It involves dividing the audience into two groups, one exposed to the original version and the other to a variation.

Why do A/B testing for ASO?

It helps you effectively optimize your app store listing. By comparing different versions of one element, you can identify what users fancy. Upon making the necessary improvements, you achieve better visibility, increased downloads, and enhanced user engagement. All of this is paramount for app success.

What are the main steps of split testing for ASO?

The main steps involve selecting an app store listing element to test, creating variations, splitting the audience into control and variant groups, implementing the test, monitoring and collecting data, analyzing the results, drawing conclusions, and then implementing changes or repeating based on the findings to continually enhance app performance.

What are the major mistakes to avoid during A/B testing for ASO?

You don’t want to test too many variables simultaneously, run tests for short durations, neglect statistical significance, skip audience segmentation, overlook external factors, fail to document changes, or use inadequate sample sizes. These pitfalls can lead to unreliable results and ineffective optimizations.